Media Manipulation: MLS Action Required

At the 2019 Clareity™ MLS Executive Workshop, we invited Peter Schravemade from BoxBrownie.com to present “Ethical Marketing & Photography,” to help frame the beginning of the national conversation needed to create MLS policies on media manipulation. Such policies would have implications for listing maintenance and compliance, data standards, IDX and VOW rules, agreements with photographers, and data license agreements for listing content sent to third parties.

It is not the purpose of this blog to propose restrictions on media manipulation that might be counter-productive to the selling process. Rather, the focus is to consider how MLSs might help subscribers improve their current marketing practices while reducing their risk, as well as reducing consumer dissatisfaction that may result from undisclosed, possibly deceptive, manipulations.

Media Manipulation Can Be Good - Even Necessary

Peter provided an example of an overexposed listing photo in which important details, such as the view out of the window, could not be made out. The view may be desirable, like an ocean view, or undesirable, like a sewage treatment plant. He described the common process professional photographers use: they take multiple photos at different exposures to capture all the important details, then composite them into a single image that presents the most detail. This technique, called “bracketing,” is technically media manipulation. However, it is entirely ethical and is done solely to produce an image that most closely matches what one would see in the room with one’s own eyes. In fact, failing to use bracketing to ensure our hypothetical sewage treatment plant is shown would misrepresent the listing. Other basic edits, such as straightening a photo accidentally taken at an angle, are also clearly ethical. They should be of no concern to us and should not require disclosure.

Types of Media Manipulation

There are several practices that are common and useful in the sales process but may be deceptive, depending on the implementation. Such deception may be intentional or unintentional. For example:

- Image enhancement – The intent of this edit is to show what a buyer would see when visiting the property but the original photograph may not have captured. For example, a grey sky may be changed to a blue sky to reflect a sunny day. In some areas of the US, and in most of the world, it is acceptable to add green grass. Issue: Overuse of this type of manipulation, for example adding grass to an area where it would not or could not normally grow well, can misrepresent the property.

- Twilight conversion – The intent of this edit is to show the property at a time of day that evokes a positive emotional response in a buyer. It is already a commonplace manipulation when the photographer was unable to photograph the property at sunset as desired. Issue: Photographers may change the color of the sky and add a sunset, but sometimes they put the sun in the wrong location. When this happens, the viewer may think certain rooms get southern or other exposure when they do not. The viewer may also think they can enjoy sunsets from the pool when the house would actually block them. This misrepresents the property.

- Item removal – The intent of this edit is to remove clutter that will be removed before the sale or that is not part of the sale. Issue: An overzealous editor can easily misrepresent the condition of whatever they imagine lies behind and beneath the clutter. Editing out undesirable things such as power lines, poor views, and property condition issues is obviously deceptive and unethical.

- Virtual staging (Item addition) – The intent of this edit is to show a purchaser what a space could be by adding photorealistic furniture. When executed well, this is an effective and harmless edit. Issue: If the edit is not performed extremely carefully, it is easy to misrepresent the size of the room by adding virtual items that are out of proportion to the room's actual measurements. Images of light sources that imply a fixture is present where none is installed would be deceptive. Images of items that would normally convey with the property but are not actually present would also be deceptive. So would added items that obscure a condition issue.

- Virtual renovation – The intent of this edit is to show a purchaser the potential of a property (for example, by adding a pool) or to remove an objection (for example, by adding a kitchen or renovating an abandoned property). This manipulation can also remove everything from a room, leaving it looking as if it is already prepared for painting and other finishing. Issue: If it is not disclosed well, the viewer may believe the image shows the actual condition of the room. Getting the room to that state might be expensive. Actual room preparation might also uncover other conditions that increase the cost of renovation.

- Renders / CGI / Hybrid CGI – The intention of this edit is to demonstrate what a property might look like before it has even been constructed. Issue: The reality of what is constructed is rarely identical to an artist rendering. If the viewer does not understand that they are looking at an artist’s creation and not present reality, it could be deceptive. This should be disclosed.

Ensuring that media manipulation is disclosed is important for several reasons. Obviously, we do not want to mislead brokers, agents, appraisers, or consumers. No one wants to waste time visiting a property that is not in the condition indicated by photos and other media. Professional property valuations that depend on manipulated images of the property or of comparable properties could become less accurate. People who purchase a property without verifying the accuracy of each listing image may file lawsuits. Finally, computers may one day use artificial intelligence to create data about a property from its media. In that future, we would not want to accidentally rely on a manipulated image and create incorrect data.

Actions Suggested for the MLS Industry

The MLS industry has a strong interest in the accuracy of listing information, including media. Media should represent the property accurately, and neither professionals nor purchasers should be deceived. Ideally, we should implement a national MLS policy on media manipulation that is easy to understand. It should use correct terminology, so that both real estate professionals and media creators can understand it.

The following actions should be considered:

Create and implement a national policy regarding media manipulation. Require disclosure. Make the policy easy to understand.

Educate MLS subscribers on photography “common sense.” Explain where a technique may be deceptive, and explain their responsibility to vet each manipulation against the property being sold to ensure the image is not deceptive. MLS subscribers should also be taught how to spot media manipulation providers that create deceptive images, intentionally or otherwise, and how to report issues to the MLS. It may also be worthwhile to share best practices for contracting with such providers. These could include requiring providers to tell their customers what changes were made to each image, so that the risk of accidental or intentional deception is not borne entirely by the listing agent and others who use the media. If a manipulation might be deceptive as described above, MLS subscribers need to understand their responsibility to disclose it.

Create a standard disclosure as a part of the media manipulation policy. Peter Schravemade provided the following language as a starting point for discussion:

Policy should also include rules for displaying such disclosures. A disclosure could appear in the media itself as a watermark, or prominently near the media inside the MLS, on IDX/VOW sites, and wherever the content is syndicated.

Establish RESO data standards for storing and transmitting information about media manipulation. Each type of media manipulation listed above could be an enumerated value of the field. Peter suggested an additional value: “A digitally activated fireplace or appliance”.
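To make the idea concrete, here is a minimal sketch of how such a field might be modeled. All names in it are hypothetical (`MediaManipulation`, `MediaItem`, `disclosure_label`), as is the placeholder label text. It is not a RESO definition and does not reproduce Peter's proposed disclosure language. Each value mirrors one manipulation type described above, and any recorded value triggers a disclosure.

```python
from dataclasses import dataclass, field
from enum import Enum


class MediaManipulation(Enum):
    """Hypothetical enumeration values, one per manipulation type in this post.

    These names are illustrative only; they are not RESO Data Dictionary values.
    """
    IMAGE_ENHANCEMENT = "Image Enhancement"
    TWILIGHT_CONVERSION = "Twilight Conversion"
    ITEM_REMOVAL = "Item Removal"
    VIRTUAL_STAGING = "Virtual Staging"
    VIRTUAL_RENOVATION = "Virtual Renovation"
    RENDER_CGI = "Render / CGI / Hybrid CGI"
    DIGITALLY_ACTIVATED_FIXTURE = "Digitally Activated Fireplace or Appliance"


@dataclass
class MediaItem:
    """A single listing photo or video plus any recorded manipulations."""
    media_key: str
    # Basic corrections such as bracketing or straightening are not recorded,
    # so an empty set means no disclosure is needed.
    manipulations: set[MediaManipulation] = field(default_factory=set)

    def requires_disclosure(self) -> bool:
        return len(self.manipulations) > 0

    def disclosure_label(self) -> str:
        """Hypothetical label text for a watermark or a caption near the media."""
        if not self.requires_disclosure():
            return ""
        names = sorted(m.value for m in self.manipulations)
        return "Digitally altered: " + ", ".join(names)


photo = MediaItem("IMG-001", {MediaManipulation.VIRTUAL_STAGING,
                              MediaManipulation.TWILIGHT_CONVERSION})
print(photo.disclosure_label())
# Digitally altered: Twilight Conversion, Virtual Staging
```

Storing manipulation types as structured data, rather than only as a watermark burned into the image, would let the MLS, IDX/VOW displays, and syndication feeds each render the disclosure in their own way.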

Once there are policies and data standards related to media manipulation, make changes to the MLS listing maintenance software so this data can be managed.

Consider if and how MLS rules and data license agreements may be amended to protect parties that use the media from risk due to deceptive media manipulation that was missed by the listing agent.

Last Words

Media manipulation has become less expensive and is increasingly commonplace. Each of the types of manipulation described above can be a perfectly legitimate and valuable sales tool - when executed correctly and disclosed. Creating MLS policies and taking the related actions described above should help us maintain professionalism and ethics and reduce risk for those using manipulated images.

MLSs Must Continually Articulate Value

To improve and maintain subscriber satisfaction, multiple listing services must continually articulate the value that they provide, both as an organization and through products and services.

In more than half of the MLS strategic planning processes I facilitated over the last year, pre-planning surveys and other research showed that most subscribers were unaware of many of the products and services the MLS provides in exchange for their access fees. Lower awareness correlated directly with lower satisfaction with the value provided for those fees. For some clients, where I performed more detailed research, even subscribers who knew a product existed were often unaware of its specific features, recent enhancements, and their benefits. Raising awareness of the value the MLS provides as an organization and through its products and services is difficult, but it can and must be done. Failing to put resources into such communications, and to maximize their effectiveness, would be penny-wise and pound-foolish.

Value of the MLS

Too many subscribers still think of the MLS as the way to access a database. When it comes to promoting the value of the MLS organization, the “Making the Market Work” campaign released by the Council of MLS back in June of 2017 is still the best resource for MLSs to adopt and promote through all channels. I’m still surprised how many MLSs have not adopted this campaign on an ongoing basis. If you’re an MLS executive, at your next board meeting, try giving your board members a short quiz about the value of MLS – if even they can’t articulate the tenets of confidence, connections, and community to at least some degree, more work is certainly needed.

Value of MLS Products and Services

There’s a saying in the industry: “Realtors Don’t Read,” or “RDR” for short. I dislike this saying because I have worked with so many professionals over the years, and they DO read … if the message is interesting. All too often the headline, Facebook post, or tweet reads something like “[MLS] Releases [Product Name].” If I were a busy professional, I wouldn’t click through on that either.

Professionals are primarily motivated by four types of benefit-oriented messages:

- This will help you make more money (“profit”)

- This will save you time (“ease”)

- This provides insight into your business (“control”)

- This will reduce risk or prevent you from falling behind (“fear” or “fear of missing out (FOMO)”)

For example:

- “Read about the new changes to listing input” will not generate nearly as many click-throughs as “New listing input feature saves you 20 minutes per listing”.

- “Learn more about [product]” is not as effective as “This [MLS] agent closed 18% more transactions this year by using [product].”

- “Sign up for [product] classes” will not generate as many click-throughs as “[Product], offered as an [MLS] benefit, helps you close transactions 15% faster. Click for a 5-minute video with everything you need to know.”

Improving Targeting and Opting

Another important MLS communications trend has been to improve targeting and opting. Still, some MLSs are not targeting properly. Some email every message to every subscriber, even messages that apply only to some subscribers. Others show such messages to all subscribers in a post-login popup. If an MLS doesn’t target properly, subscribers can easily burn out on the quantity of irrelevant messages they receive. Sorting subscribers into groups based on role, product and service use, and other factors is key to targeting communications properly. If messages are not targeted, subscribers may decide to opt out – an MLS communications disaster!

Another problem I still see at some MLSs is a single, all-or-nothing opt-out. These days, when you try to opt out of communications from most websites, they encourage you to opt out of certain types of communications rather than all of them, and MLSs should follow suit. Just the other day, I received a brokerage email about the value of my former house. When I clicked to opt out, it opted me out of communications about that property only. I would have had to navigate the site further to opt out of more than that. Some MLSs say they can’t have a more sophisticated opting system because their marketing system doesn’t support it. If that’s the case, it’s time to find a new marketing system.
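The sketch below combines both ideas. It assumes hypothetical subscriber attributes, topic names, and product names. A message goes only to subscribers whose role and product use match it, and opt-outs are recorded per topic instead of as a single all-or-nothing flag.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Subscriber:
    email: str
    role: str                                          # e.g. "agent", "broker"
    products: Set[str] = field(default_factory=set)    # products this subscriber uses
    opted_out: Set[str] = field(default_factory=set)   # topics opted out of, not "everything"


@dataclass
class Message:
    topic: str                     # e.g. "product-training", "rules-update"
    roles: Set[str]                # roles the message is relevant to
    product: Optional[str] = None  # if set, only users of this product receive it


def recipients(message: Message, subscribers: List[Subscriber]) -> List[str]:
    """Emails of subscribers the message applies to who have not opted out of its topic."""
    return [
        s.email
        for s in subscribers
        if s.role in message.roles
        and (message.product is None or message.product in s.products)
        and message.topic not in s.opted_out
    ]


subscribers = [
    Subscriber("a@example.com", "agent", {"showings"}),
    Subscriber("b@example.com", "broker", {"showings"}),
    Subscriber("c@example.com", "agent"),
    Subscriber("d@example.com", "agent", {"showings"}, {"product-training"}),
]
training = Message("product-training", {"agent"}, product="showings")
print(recipients(training, subscribers))  # ['a@example.com']
```

Subscriber "d" opted out of training messages only, so a message on another topic, such as a rules update sent to agents and brokers, would still reach them.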

Continuous Improvement

This article has covered just a few components of how MLSs can improve and maintain subscriber satisfaction by continually articulating the value that they provide, both as an organization and through products and services. Some readers will remember that I literally spent hours at the 2014 Clareity MLS Executive Workshop providing a more comprehensive review of communications best practices – it’s a huge topic! Still, with just a bit of planning, by taking more care to use benefit-oriented language with subscribers to articulate the value of what the MLS provides, and by improving targeting and opting methods, MLSs can dramatically improve subscriber engagement and satisfaction over time.

MLS Products & Services: From Principle to Practice

The NAR Approach

NAR has created three categories, basically:

- CORE = MLS MUST provide

- BASIC = MLS MAY (include)

- OPTIONAL = MLS MAY (offer, a la carte)

CORE

This category includes information, services, and products that are essential to the effective functioning of MLS, as defined. Examples are current listing information and information communicating compensation to potential cooperating brokers.

But how is MLS defined by NAR?

- a facility for the orderly correlation and dissemination of listing information so participants may better serve their clients and customers and the public,

- a means by which authorized participants make blanket unilateral offers of compensation to other participants (acting as subagents, buyer agents, or in other agency or non-agency capacities defined by law),

- a means of enhancing cooperation among participants,

- a means by which information is accumulated and disseminated to enable authorized participants to prepare appraisals, analyses, and other valuations of real property for bona fide clients and customers, and

- a means by which participants engaging in real estate appraisal contribute to common databases.

The phrase "listing information" seems too limited, given all the kinds of information resources MLS subscribers expect these days, the types of information being standardized at RESO, and all the types of data needed for a core MLS system or database to interoperate with all of the various tech tools in use by MLS subscribers. I would suggest that perhaps the definition of MLS could use a little updating by eliminating the word "listing" rather than trying to create some kind of all-inclusive list.

BASIC

This is determined locally and provided automatically or on a discretionary basis, and includes items such as: sold and comparable information, pending sales information, expired listings and “off market” information, tax records, zoning records/information, title/abstract information, mortgage information, amortization schedules, mapping capabilities, statistical information, public accommodation information, MLS computer training/orientation, and access to affinity programs.

Some brokers and broker groups have declared many things out of scope for MLS: agent websites, CRM, property marketing tools, showing systems, transaction management systems, and MLS public-facing websites. One large group complained loudly a few years ago about MLSs pushing NAR to add as many items as possible to the list of ‘basic’ MLS functions to force participants to pay for them, whether they want them or intend to use them or not. Since that time it was determined that in-person training could not be mandated - clearly things are in flux.

But it seems obvious that showing systems could be considered critical infrastructure for efficient cooperation. The case for inclusion could be made for other items on the brokers' list as well. How do we know who’s right, and what belongs on that CORE and BASIC list and what doesn't? I do NOT think we should be evaluating the distinction between these lists to serve the interests of the "lowest common denominator" of MLSs OR go wild adding items to the list willy-nilly. I DO think we need to apply some additional principles and I'll come back to that.

OPTIONAL

An MLS may not require a participant to use, participate in, or pay for the following optional information, services, or products: lock box equipment including lock boxes (manual or electronic), combination lock boxes, mechanical keys, and electronic programmers or keycards; advertising or access to advertising (whether print or electronic), including classified advertising, homes-type publications, electronic compilations, including Internet home pages or web sites, etc.

Do Not Pass Go...

Those of you who attended the 2012 Clareity MLS Executive Workshop probably remember the cautionary note about anti-trust tying violations - how easy it is to get into trouble if one creates an illegal tie between one product (e.g. membership / subscription) and another when expanding a product offering. I won't dive into the details here, but I encourage readers to remember the four elements of such a tie:

- There must be two separate products;

- There must be a tie;

- The seller must have enough power in the market for the tying product so that it can impact trade in the market for the tied product; and

- A certain amount of sales for the tied product must actually be impacted by the tie.
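The four elements are conjunctive: all of them must be present. The toy checklist below makes that structure explicit, and all of its names are hypothetical. It only organizes the questions to bring to antitrust counsel and makes no legal determination.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class TieScreen:
    """Hypothetical checklist of the four tying elements listed above."""
    separate_products: bool      # 1. there are two separate products
    tie_exists: bool             # 2. buying one is conditioned on buying the other
    tying_market_power: bool     # 3. enough power in the tying-product market to affect the tied market
    tied_sales_affected: bool    # 4. some amount of tied-product sales is actually affected

    def missing_elements(self) -> List[str]:
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def all_elements_present(self) -> bool:
        # The elements are conjunctive: a single missing element defeats the tie claim.
        return not self.missing_elements()


bundled = TieScreen(separate_products=False, tie_exists=True,
                    tying_market_power=True, tied_sales_affected=True)
print(bundled.all_elements_present(), bundled.missing_elements())
# False ['separate_products']
```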

I'm insistent that we must continue to re-evaluate the definition of MLS precisely because defining the product set that reflects the function of MLS (versus a separate product) is such a core part of the testing.

Another Approach: Principle

What are the principles by which MLS information, services, and products belong in the categories of core, basic, and optional? I not only believe we must more clearly define MLS but also clearly define the principles that are considered when evaluating the categorization of products.

Each bullet point in the definition of MLS services effectively spells out a core principle, which is a test for how appropriate it is for a product or service to be included in the basic MLS package. In short, at least given the current MLS definition:

A. Manage/Disseminate info so participants better serve clients, customers, and public.

B. Means for compensation offer

C. Enhance participant cooperation

D. Participants: appraisals, analysis, valuation for clients

E. Participant appraisals

But let's look at some other elements for consideration:

Principle 1. Network Power. Does the product or service require many or all MLS subscribers to use it to achieve benefits from it? Professional collaboration tools (e.g., transaction management and showing systems) would fall under this principle, unless they interoperate well enough that collaboration can occur without everyone using the same system.

Principle 2. Economic feasibility. Does the product or service help participants better serve their clients but is it economically or otherwise infeasible for any one participant to field the product or service on their own?

Principle 3. Integration. Does the product or service require a level of integration into core systems that would not be feasible from an economic and/or interface perspective if every broker or agent selected their own? Note that ability to integrate continues to evolve.

Principle 4. Economic Interest. Is there an overarching subscriber economic interest?

Note that principles 1-4 help to refine consideration and categorization of items already considered relative to A-E (or an updated MLS definition that drives a different A-E). And, of course, all has to be considered against the potential for creating an illegal tie.

During my presentation at the Clareity MLS Executive Workshop we considered a number of product examples and evaluated them against 1-4 and A-E. That's the approach I'm suggesting MLS leadership take as they are approached with "shiny objects".
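One way to make that exercise repeatable is a simple worksheet. The sketch below uses hypothetical names. It records which definition functions (A-E) a product serves and which of principles 1-4 it meets. The decision rule is an assumption for illustration only. It treats A-E as the threshold test and principles 1-4 as refinements, mirroring the relationship described above. It does not replace the tying analysis or local judgment.

```python
from dataclasses import dataclass, field
from typing import Set

# Shorthand labels from this post; the decision rule below is hypothetical.
FUNCTIONS = {
    "A": "Manage/disseminate info for clients, customers, and public",
    "B": "Means for compensation offer",
    "C": "Enhance participant cooperation",
    "D": "Appraisals, analysis, valuation for clients",
    "E": "Participant appraisals",
}
PRINCIPLES = {1: "Network power", 2: "Economic feasibility",
              3: "Integration", 4: "Economic interest"}


@dataclass
class ProductReview:
    name: str
    functions: Set[str] = field(default_factory=set)   # which of A-E the product serves
    principles: Set[int] = field(default_factory=set)  # which of principles 1-4 it meets

    def __post_init__(self) -> None:
        unknown_functions = self.functions - set(FUNCTIONS)
        unknown_principles = self.principles - set(PRINCIPLES)
        if unknown_functions or unknown_principles:
            raise ValueError(f"unknown codes: {unknown_functions | unknown_principles}")

    def suggested_category(self) -> str:
        # Assumed rule: serving an MLS function (A-E) is the threshold test;
        # principles 1-4 then refine whether it belongs in the basic package.
        if self.functions and self.principles:
            return "basic candidate"
        return "optional"


showing = ProductReview("Showing system", {"C"}, {1, 3})
website = ProductReview("Agent websites")
print(showing.suggested_category())  # basic candidate
print(website.suggested_category())  # optional
```

How any real product is scored remains a judgment call for MLS leadership. The worksheet simply keeps that judgment tied to the stated principles.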

Additional MLS Considerations

An MLS is unlikely to go through a process of product evaluation unless the product appeals to subscribers, that is, it fills a subscriber need. But choices must also be made based on whether the product is strategic for the MLS and its subscribers in some manner, how important and urgent it might be for the MLS to field the product at that time and, of course, cost. Also, MLSs typically have limited capacity to roll out new products and continually encourage adoption of those products - again, choices must be made.

Deciding what to do when a product or service is not as well adopted as desired, or if there is dissatisfaction with it, is a topic for another blog, another day.

Next Steps for the Industry

I would suggest that industry leaders collaborate on the following:

- Consider how we might modernize the definition of MLS (perhaps beyond just fixing reference to “listings”). Think about what we aspirationally want the MLS industry to become - again, a subject for another post.

- Refine core (and basic) MLS services as a standard to reflect that new definition. Phase-in over time to allow MLSs to determine strategy for coming up to snuff (on their own or together).

- Add four additional principles to the section of MLS policy relating to service characterization (core, basic, optional)

- Remove lists from policy and put them in a “best practices” document explaining how each product/service (and new ones) relate to definition and principles.

- Run it all by anti-trust attorneys!

Strategic MLS Issues for 2019

Expand what cooperation via MLS means. If MLS is perceived as just a place to advertise homes with some private fields, MLS is very vulnerable. If we make it more explicit that cooperation is about a lot more than that, the MLS can grow stronger.

To accomplish that, we can:

- Develop standards for compliance and enforcement: data standards and business rules, data distribution, maintaining "fair" online advertising using the compilation (IDX / VOW) and other uses.

- Develop core standards for MLS data integrity business rules.

- Consider the kind of government regulation the industry could face regarding wire fraud, and get ahead of it - MLS can be a part of that, if organized. Information security practices will be part of that. Many of the steps needed to deal with wire fraud take place during the "cooperation" phase, where the MLS is, or could be, involved.

- Develop and implement standards for brokers and agents ("with teeth") regarding responsiveness to showing requests and participation in secure electronic communications and transaction management. Develop these as MLS-monitored areas, with supported core functions as needed. As elements of cooperation, these would seem to fall within the jurisdiction of MLS. What other areas, technological and otherwise, could be considered "cooperation" and be an MLS function?

There are many challenges ahead for MLS - I think it would be ideal to firm up its value and capabilities in this area.

Consolidation: A unified industry would be more capable of managing risk.

To encourage consolidation, we could:

- Develop and mandate core standards for MLS, based on CMLS best practices.

- Drive recognition of strategic issues driving consolidation BEYOND the local service needs and Overlapping Market Disorder (OMD) - for example, managing risks described in the DANGER report, information security, and legal challenges (without depending on subsidy from the national level). Positioning based on OMD alone was unfortunate because that is only one of the drivers for consolidation.

- Determine the MLS consolidation end-game (per my earlier blog on the subject). When I speak on the subject of consolidation or help MLSs achieve it, I create a map based on consumer-oriented data that allows us to productively discuss the end-game. This market-driven end-game map should drive the tactics used to achieve consolidation. Those tactics should rest on consumer needs and the professional access needed to serve the consumer, rather than on existing industry structures. Add the other broker and core standards factors, and we should have a better picture of the end-game we are aiming for.

- NAR itself could get the right people from each organization to sit down together and work consolidation out. Peer-to-peer asks have had some effect, but progress is slow. Not everyone comes to MLS conferences like the Council of MLS or the Clareity MLS Executive Workshop to understand why consolidation is so important.

- Some of the states have, in the past, worked against consolidation - this must be discussed at the national level. Shared services at the state level solve some problems, but are stopping others from being solved.

Front End of Choice

Most MLSs are not ready to unbundle for FEoC. That means providing a core "pipe" of information and allowing product choice, including products provided through the MLS organization, through brokerages, and purchased by agents themselves. I don't think moving this trend forward is especially important, because the other issues described above are much more urgent. Still, I don't think this trend is going to go away. If the industry is going to support it, potentially incompatible licensing processes and the per-user cost bundles MLSs have created will need to be addressed. A few large MLSs (MRIS, CRMLS, Northstar MLS) have developed the technical infrastructure to support this - and it is not easy! Others have focused on providing FEoC for alternate means of MLS data access while providing a single core MLS system. Once the further data standards needed to support this type of business are created, there may be increasing competition for this core infrastructure.

Brokers are dealing with a similar issue today. Some deploy suites, but many more deploy best-of-breed products, integrated as best they can without data standards. Many brokers are happier when they create this best-of-breed solution, but it's expensive and difficult because of that lack of data standards. Licensing models create more inertia in the MLS space to stick with a single-vendor suite plus a few integrated products. However, data standards in the MLS space are more advanced than in the broker space, and alternate front ends are starting to emerge, so I expect this is going to happen. Again, "How urgent and important is this?" is a question that needs to be asked when considering this initiative - and the answer is not going to be the same for every MLS.

Other Issues

Every MLS has different issues to address in strategic planning. For example, in the last few plans I have facilitated in 2018, "Front End of Choice" didn't come up at all from subscribers or the leadership, while the other two issues did in one form or another - along with other local issues. Some of those local issues are ones I've seen recently in several organizations and could well be covered in another blog post.

Security Auditing compared with Penetration Testing

The short explanation is that a “penetration test” is just one small component of a “security audit,” and that the software / service provider was aiming for a much lower bar than we were aiming for on behalf of the executive and her MLS. Following is an explanation of how much lower it is.

A penetration test is an attempt to find weaknesses in the defenses in a computer system’s security. Sometimes the term is used in reference to non-computer security, but typically not. The test usually consists of a combination of automated and manual testing to find specific attacks that will enable the attacker to bypass certain defenses, then working to find additional vulnerabilities that can be found only once those initial defenses are defeated. As a result of this testing, risk can theoretically be assessed and a remediation plan put in place. One can also evaluate how well an organization detects and responds to an attack.

One significant problem with penetration testing is that, if the system being tested has good outer defenses, a penetration test may not find security risks lurking inside those defenses. It may then report that risk is low and no action is needed. Later, a change to the outer defense may create a vulnerability, or a vulnerability may be discovered in that outer defense. When that happens, actual attackers will breach all of the inner layers where security has not been well designed. It’s a well-known principle of security design that one builds multiple levels of security (a/k/a “defense in depth”). Penetration testing, on its own, doesn’t reliably measure whether this has been done properly.

For the more technical readers, I will provide an example:

The other problem with using a penetration test as the sole way to measure security is that information security is a much broader area of exploration, normally measured during a full security audit. Most of the breaches we’ve had in this industry have been the result of weak policy and procedure or the contracts that reflect those policies, inadequate human resources practices, and physical security issues. Still other technical security issues have resulted from a lack of protection against screen scraping, and yet others from authentication related issues – both items that most penetration testing tools cannot easily uncover. Looking at the security configuration of network equipment, servers and workstation operating systems, platforms, and installed software, mobile devices, antivirus, printer and copier configuration (yes, I really said copiers!), password selection, backup practices and so much more – all of that falls outside the purview of typical penetration testing and is only reliably addressed via a full security audit.

There’s nothing wrong with using a penetration test as a part of a security audit – just don’t mistake the part for the whole.
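The outer-defense blind spot described in this section can be shown with a toy model. In the sketch below, all layer names and request fields are hypothetical, and an attack succeeds only if every layer lets it through. A test that probes only the outer layer gets the same answer for a thin design and a layered one. The difference appears only after the outer layer fails.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Layer = Callable[[Request], bool]  # returns True if the layer lets the request through


# Hypothetical layers, from outermost to innermost.
def network_filter(req: Request) -> bool:
    return req.get("port") == "443"


def misconfigured_filter(req: Request) -> bool:
    return True  # the same outer layer after a bad change: lets everything through


def authenticated(req: Request) -> bool:
    return req.get("session") == "valid"


def authorized(req: Request) -> bool:
    return req.get("role") in {"agent", "broker"}


def breached(layers: List[Layer], req: Request) -> bool:
    """An attack succeeds only if every layer lets it through."""
    return all(layer(req) for layer in layers)


probe = {"port": "8080"}  # an unauthenticated attack from outside

# Before the change, probing the outer defense gives the same result for both designs.
thin = [network_filter]
deep = [network_filter, authenticated, authorized]
print(breached(thin, probe), breached(deep, probe))  # False False

# After the outer defense fails, only the layered design still stops the attack.
thin_after = [misconfigured_filter]
deep_after = [misconfigured_filter, authenticated, authorized]
print(breached(thin_after, probe), breached(deep_after, probe))  # True False
```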

Report from Clareity’s 17th Annual MLS Executive Workshop

Clareity’s annual MLS Executive Workshop took place in Scottsdale, Arizona and, as it has every year, the event was sold out. Responding to our post-event survey, MLS executives made comments like:

- “Great event this year. Always the best networking.”

- “Informative and insightful. Can’t wait for next year’s workshop.”

- “As always – groundbreaking and ‘explode your brain’ wonderful!”

- “For me, this meeting truly does start the conversation for the year.”

- “Love the size. Good content, all substance.”

- “This was the best MLS Workshop ever.”

Here are some takeaways from the Workshop:

Welcome / State of Industry

Gregg Larson shared observations from 2017 and an outlook for 2018 and beyond. This included a broad roundup of consumer technologies, the MLS and tech vendor merger and acquisition trend, and new brokerage models and what they could mean for traditional brokers and the MLS. While many in the industry view these brokers with fear, Gregg focused more on how these companies seek to meet consumer needs and how we all can adjust to industry change based on those needs. Later in the Workshop, Clareity gave both Redfin and OpenDoor an opportunity to explain how they work well within the MLS community – more on that later in this report. Gregg also thanked the sponsors that let us run a quality show at a low cost for attendees.

Major Brokers Demand Better MLS Security

HomeServices of America is advocating for vendors, including MLSs, to adopt more stringent security measures. HomeServices’ CIO, Alon Chaver, talked about how they are beginning to work with several MLSs on this and how they intend to expand that effort to other MLSs. Clareity’s been beating the security drum for over twenty years now, so we welcome HomeServices to the effort.

Alon’s takeaways for MLSs:

- Minimize outbound data distribution: provide only the data, data sets, and data fields required to perform the contracted services.

- Require contractual assurances at contract renewal: a commitment from vendors to secure the data they receive. That includes not sharing data with third parties unless they are authorized and vetted for security, minimizing programmatic access via APIs, and requiring breach notifications, insurance coverage, and indemnity provisions.

If your organization hasn’t yet begun an organizational security program or just wants a fresh set of eyes on your security practices, please contact Clareity’s Matt Cohen to discuss moving forward.

Managing Compliance for the new Mandatory Waiver Policy

Carolina MLS has had a waiver policy in place for a while now. Steve Byrd explained the various conditions under which his MLS allows waivers, the scope of waiver use, and the MLS’s three-part process for catching “cheaters”:

- Using Listing Data Checker software to look for co-listing violations. Since subscribers are not allowed to co-list with non-subscribers, staff use the tool to search the Public, Agent, and Company Remarks and Directions fields for keywords such as “co-listed” and related terms, as well as for email addresses, web URLs, or phone numbers that might be a sign of co-listing.

- Clareity’s SAFEMLS + RISK product works as a constant deterrent. Even so, using the tool, Carolina MLS issued 19 notices or warnings to subscribers for password sharing and unauthorized use of the MLS, and issued four significant fines for password sharing.

- Reports by Agents. When agents ask what to do because the selling agent is not an MLS subscriber, or because they can’t find that agent in the roster, the MLS investigates. Carolina MLS has fined and back-billed six times since 2013.
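To make the first step concrete, here is a minimal Python sketch of the kind of scan a listing-checking tool might run over those fields. It is not Listing Data Checker’s actual logic; the field keys, the regular expressions, and the phone format (North American only) are assumptions for illustration. Each hit is a prompt for staff review, not proof of a violation.

```python
import re

# Illustrative patterns only; an MLS would tune its own list of "related terms".
CHECKS = {
    "co-listing term": re.compile(r"\bco[- ]?list(?:ed|ing)?\b", re.IGNORECASE),
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "web URL": re.compile(r"\b(?:https?://|www\.)\S+", re.IGNORECASE),
    # North American formats such as 704-555-0123 or (704) 555-0123
    "phone number": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}\b"),
}

# Hypothetical keys for the fields named in the post.
FIELDS = ("Public Remarks", "Agent Remarks", "Company Remarks", "Directions")


def flag_listing(listing: dict[str, str]) -> list[tuple[str, str]]:
    """Return (field, reason) pairs that a staff member should review."""
    flags = []
    for field in FIELDS:
        text = listing.get(field, "")
        for reason, pattern in CHECKS.items():
            if pattern.search(text):
                flags.append((field, reason))
    return flags


if __name__ == "__main__":
    sample = {
        "Public Remarks": "Charming ranch, co-listed with Jane at (704) 555-0123.",
        "Directions": "See www.example.com for a map.",
    }
    for field, reason in flag_listing(sample):
        print(f"{field}: {reason}")
```

Returning the field and the reason, rather than a yes/no answer, lets staff see why a listing was flagged, which matters because not every hit is actually a co-listing.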

Leadership Lessons

In a session facilitated by Denee Evans, two CMLX3 graduates, Colette Stevenson and Stan Martin, shared leadership lessons learned from their CMLX3 experience. There’s no way to sum up such a complex conversation easily, but one of the most interesting parts of the session was when Colette and Stan talked about discovering their strengths and weaknesses as part of the process and how they improved their management capabilities as a result.

Blockchain: What does it mean for the MLS?

NAR / CRT’s David Conroy explored blockchains, which provide a verifiable and trustworthy record of events or transactions, and showed how this new technology could evolve to:

- Improve property records

- Greatly reduce cost of business for all parties

- Reduce risk in real estate transactions

- Help buyers & sellers get to the closing table faster
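The property that makes a blockchain record “verifiable” can be sketched in a few lines. The Python below is a minimal, hypothetical hash-chained log (the event fields are invented): each record stores the SHA-256 hash of the previous one, so altering any earlier entry breaks verification. It deliberately omits what makes a real blockchain trustworthy among parties who don’t trust each other, such as distributed copies and a consensus mechanism.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class Record:
    index: int
    event: dict
    prev_hash: str
    hash: str


def compute_hash(index: int, event: dict, prev_hash: str) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic
    payload = json.dumps({"index": index, "event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    def __init__(self) -> None:
        self.records: list[Record] = []

    def append(self, event: dict) -> None:
        prev = self.records[-1].hash if self.records else GENESIS
        index = len(self.records)
        self.records.append(Record(index, event, prev, compute_hash(index, event, prev)))

    def verify(self) -> bool:
        """Recompute every hash; any edit to an earlier record breaks the chain."""
        prev = GENESIS
        for i, rec in enumerate(self.records):
            if rec.index != i or rec.prev_hash != prev:
                return False
            if rec.hash != compute_hash(rec.index, rec.event, rec.prev_hash):
                return False
            prev = rec.hash
        return True


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append({"parcel": "APN-001", "type": "deed recorded", "to": "Buyer A"})
    ledger.append({"parcel": "APN-001", "type": "lien released"})
    print(ledger.verify())  # True
    ledger.records[0].event["to"] = "Someone Else"  # tamper with history
    print(ledger.verify())  # False
```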

Standards Evolution Supporting New Innovations

CRMLS’s Art Carter presented information about the growth of RESO; case studies from myTheo, Homes.com, and others demonstrating RESO successes; highlight videos from RESO’s DataComp event; and how standards evolution is supporting true innovation in the real estate technology space. In the myTheo example, that company reduced product time to market from 6-7 weeks down to 3-4 weeks and reduced the staffing resources needed to launch in a new MLS market by 30-40% – all by using a certified RESO feed.
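A sketch of why a certified feed saves onboarding time: without one, a vendor writes and maintains a translation table for each MLS before any shared code can run. In the Python below, the local field names and status codes are hypothetical; ListPrice, StandardStatus, BedroomsTotal, and the status values Active, Pending, and Closed are RESO Data Dictionary names. This illustrates the per-market work a standard removes, not how myTheo built its product.

```python
# Hypothetical local field names and status codes from two MLSs. With a
# RESO-certified feed, both would already arrive using the standard names,
# and the vendor would not need to build or maintain these tables.
FIELD_MAPS = {
    "mls_a": {"LP": "ListPrice", "Stat": "StandardStatus", "Beds": "BedroomsTotal"},
    "mls_b": {"AskPrice": "ListPrice", "ListingStatus": "StandardStatus", "NumBeds": "BedroomsTotal"},
}
STATUS_MAPS = {
    "mls_a": {"A": "Active", "P": "Pending", "S": "Closed"},
    "mls_b": {"ACT": "Active", "PND": "Pending", "SLD": "Closed"},
}


def to_standard(source: str, record: dict) -> dict:
    """Rename fields and translate status codes into Data Dictionary terms.

    Unknown status codes raise KeyError so that mapping gaps get noticed.
    """
    fields = FIELD_MAPS[source]
    out = {fields[k]: v for k, v in record.items() if k in fields}
    if "StandardStatus" in out:
        out["StandardStatus"] = STATUS_MAPS[source][out["StandardStatus"]]
    return out


def active_listings(records: list[dict]) -> list[dict]:
    # Downstream code is written once, against the standard names only.
    return [r for r in records if r.get("StandardStatus") == "Active"]
```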

MLS Copyright and the “Spark” of Creativity

Matt Cohen moderated a panel including Mitch Skinner, Claude Szyfer, and Brad Bjelke to discuss the Copyright Office’s re-evaluation of whether MLSs can copyright their compilations based on “creativity.” The panel provided a status update on the Copyright Office discussions and NAR’s role in them. In an especially fun part of the session, Brad role-played making arguments on behalf of the Copyright Office while Mitch and Claude argued against him. We discussed what MLSs could do to increase the creativity of their compilations. We also discussed whether use of data standards (common field names and enumerations) could reduce the creativity of MLS compilations and cause issues. The answer is “yes, at least some,” but that can’t get in the way of data standards adoption, and there are lots of other ways these compilations are creative. We need to better demonstrate to the government just how creative they are.

Future of IDX and VOW

Matt Cohen, homes.com’s Andy Woolley, and Fantis Group Real Estate & Clientopoly’s Tony Fantis talked about the myriad issues with the current IDX and VOW policies, and presented some visions of how policy could be changed to allow brokers and their vendors to provide more innovative uses of IDX/VOW data. One vision was very large in scope but evolutionary, while the other was more revolutionary. The reasons for each approach and the pros and cons of each were discussed. We hope those in the audience on relevant NAR committees – and those that influence those committees – will pick up the ball and run with it.

Should MLSs be Supporting Successful Agents?

Xplode’s Matt Fagioli presented a vision of how agents will be successful going forward with technology and what MLSs could be doing to support them. Many tools were discussed, but some MLSs said there was one big takeaway for them: figuring out how to help their agents take advantage of Instagram, since, according to a 2017 Forrester report, Instagram has a 2.2 percent per-follower interaction rate versus Facebook’s 0.22 percent.

MLS Consolidation

T3 Sixty’s Kevin McQueen gave the audience some interesting statistics to think about: we’re down to 677 MLS organizations, and 88 regional MLSs serve 80% of REALTORS®, while the remaining 600 or so MLSs serve the other 20%. Kevin described a useful tactic for initiating discussion: take inventory, looking at duplicate listings, subscribers, and listing agents, and quantify the waste from the inefficiency, putting a dollar figure on it that makes sense to stakeholders. He suggested that the important thing to get the ball rolling on consolidation is to get groups in the room together – sometimes with state association leadership, with 2-3 larger MLSs (not just one “gorilla”), and involving brokers. Kevin suggested we may want to focus on the most severely overlapping markets, especially the nine states containing over 350 MLSs.
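Kevin’s inventory step can start as simply as counting the same property appearing in more than one MLS. The Python below is a rough sketch under stated assumptions: listings arrive as (MLS, address) pairs, addresses are matched with crude normalization, and the cost per duplicate entry is a placeholder each market would estimate for itself. The same grouping works for subscribers paying dues to several MLSs.

```python
from collections import defaultdict


def normalize_address(address: str) -> str:
    # Deliberately crude: real matching must also handle unit numbers,
    # "Street" vs "St", and typos, or use parcel IDs where available.
    return " ".join(address.upper().replace(".", "").replace(",", "").split())


def duplicate_inventory(listings, cost_per_duplicate: float) -> tuple[int, float]:
    """listings: iterable of (mls_name, address) pairs.

    Returns the number of redundant listing entries (copies beyond the first
    MLS carrying a property) and an estimated cost for them.
    """
    mlss_by_property = defaultdict(set)
    for mls, address in listings:
        mlss_by_property[normalize_address(address)].add(mls)
    duplicates = sum(len(mlss) - 1 for mlss in mlss_by_property.values())
    return duplicates, duplicates * cost_per_duplicate


if __name__ == "__main__":
    sample = [
        ("MLS A", "123 Main St."),
        ("MLS B", "123 main st"),
        ("MLS C", "123 Main St"),
        ("MLS A", "9 Oak Ave"),
    ]
    print(duplicate_inventory(sample, cost_per_duplicate=25.0))  # (2, 50.0)
```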

The Future of Brokerage and What It Means for MLS

Rob Hahn & Sunny Lake Hahn ended day one with an entertaining look at brokerage. They described a barely profitable traditional brokerage model, the increasing pressures brought by agent teams that “own the kitchen table” where the relationship with consumers is formed, additional pressures brought by 100% commission shops, and even more pressure being brought by technology brokerages. They described a potential future in which brokerages are run like professional services firms, with equity partners and employee associates, and in which there may be fewer brokerages and agents. Perhaps MLSs will need to establish a different financial model to address shrinking membership, and MLSs may need to continue to evolve policy with regard to teams. In summary, Rob and Sunny exhorted MLSs to stop fighting, understand the pain brokerages are experiencing, and help “save them.”

A Tragedy of the Commons

Redfin’s Chelsea Goyer presented Redfin’s pro-MLS point of view, countering a “think tank” article that invoked Redfin’s name and painted MLSs negatively. She talked about an article she and Glenn Kelman had written about this called “A Tragedy of the Commons”. According to Wikipedia, “The tragedy of the commons is a term used in social science to describe a situation in a shared-resource system where individual users acting independently according to their own self-interest behave contrary to the common good of all users by depleting or spoiling that resource through their collective action.” In the view published by Chelsea and Glenn, it’s important for brokers to support the shared-resource system that is MLS. Chelsea also talked about the need for MLSs to consider how membership should be (and feel like) a privilege, how MLSs could be more transparent, how the MLS can be modernized, how important data standards are, and how MLS consolidation should make things better for brokerages. This was a great session!

Zillow’s Listing Ecosystem

From listing input to listing distribution, Zillow’s Errol Samuelson described how they work within existing MLS infrastructures. The Bridge Interactive API is RESO Platinum certified, supporting the Data Dictionary as well as additional fields and extended datasets. He demonstrated a well-designed management interface and reporting capabilities. Errol also explained how the solution could be used not only to manage data distribution from a single MLS, but also to “bridge” multiple MLSs into a single feed for brokers and their vendors. He also showed a mobile-friendly listing input system that complies with MLS business rules. This solution is live in Atlanta, and coming soon to Rhode Island, Huntsville, Boston, and Oakland / Berkeley.

Personas as a Way of Better Understanding Subscribers

MLSListings’s Dave Wetzel presented a different approach to understanding subscribers better using personas. A persona is a fictional character who embodies certain essential characteristics, such as attitudes, goals, and behaviors, of a particular subset of the users of a product or service. Personas are constructed using sample data collected from actual users. Constructing personas creates internal focus and understanding of your users across your teams and organization. They help internal teams empathize with users, including their behaviors, goals, and expectations. They help the company talk in terms of user needs, and they support better decision making where users are concerned. Dave described the five personas they identified in their MLS and suggested other MLSs do similar research to create their own personas to guide their efforts.

Managing the DANGERs and Other Risks

While managing risk is an important business function, many MLSs feel the risks we face are too big to manage. Matt Cohen helped explore what MLSs can do about the DANGERs (from the NAR D.A.N.G.E.R. Report) and other risks, as discussed in “MLS 2020 Agenda”. Some risks are too much for smaller MLSs to manage – which is one reason MLS consolidation is important. Some risks may even be too large for larger regional MLSs, and may take cooperation to address. The session covered five risks, re-evaluating them for probability, timing, and impact, and providing an initial set of risk mitigations for discussion. For example, mitigations for the information security risk include:

- Security Assessment & Remediation

- Strong Authentication

- Anti-Scraping (MLS resources)

- Anti-Scraping (IDX requirement)

- API Security

Homesnap (Broker Public Portal)

Gregg interviewed Guy Wolcott, the Founder of Homesnap. He asked probing questions about measuring success of the effort, and about how the company plans to achieve greater success in the future.

How to Capture, Communicate and Close in today’s “On Demand” World

Realtor.com’s Bob Evans described Realsuite, their new product, which includes “Respond”, which quickly delivers responses to client inquiries; “Connect”, which provides a contact management system and includes market data reports; and “Transact”, which organizes documents and tasks and includes form integration and electronic signatures.

Power of the Network and Site Licenses

Matt Cohen moderated a panel including Lone Wolf / Instanet Solutions’ Joe Kazzoun, Showing Time’s Michael Lane, Real Safe Agent’s Lee Goldstein and CSS’s Kevin Hughes. Panelists described the conditions under which products are optimally site licensed, versus licensed “a la carte” or provided as one of several choices. Each described the benefits of site licensing for their product, and the panel discussed the hybrid model of licensing core features but upselling additional capabilities to individual users. Finally, we discussed data standards and how companies may choose to share data – or not share it – with business partners, competitors, and consumers. While standards make it possible to move data more easily, business, legal and privacy issues all affect whether data will be shared.

OpenDoor.com

Gregg Larson interviewed Kerry Melcher, GM of OpenDoor.com, the original and leading iBuyer in the country. Their brokerage works like this: sellers request an offer, and the brokerage makes an offer to buy the property itself immediately, rather than trying to find a buyer for it. If the seller is interested, the brokerage then conducts a home assessment. If repairs are needed, the seller can either make them or have the costs deducted from the offer, in which case the brokerage makes the repairs. Payment then happens in just a few days. OpenDoor then maintains the property and finds a buyer. As discussed during the session, it’s important to note that the company buys at retail and sells at retail – this is not about buying low and flipping homes. OpenDoor works with buyers too. Buyers can use its app to gain access to the homes it has for sale, and every home comes with a 30-day satisfaction guarantee.

While a lot of people are afraid of the change that iBuyers might bring to the industry, Kerry made it clear that they work cooperatively with other agents all the time and conform to MLS rules. Also, by making the transaction easier for consumers, they believe their approach will result in more transactions and more money for the real estate industry overall.

Final Words

It’s “business as usual” at Clareity. If you want more information on our professional services (strategic planning, MLS regionalization, public speaking, security audits, etc.), please contact Matt Cohen or Gregg Larson. If you want information about Clareity’s security and SSO products, please contact Troy Rech.

Clareity packed a lot of perspectives and content into a ton of sessions over a day and a half – but the Workshop is about more than content – it’s about relationship building. We’ve listened to those attendees with ideas of how to make the event even better – besides the meals together and fun outings on arrival day, the longer breaks have made more networking possible during the event. Over the past 17 years, MLS executives and their guests have enjoyed our event which, we’ve heard in post-event surveys, is “just the right size” and “full of takeaways.” We promise to continue to improve the Workshop based on attendee feedback.

Thank you for your support!

Real Estate Association APIs and Data Standards

Association management systems (AMS) have traditionally been silos in which most information about a member cannot easily be used to provide a custom per-member experience or to solve other business problems. Also, many associations want to use “best of breed” solutions to provide service to members, and the lack of integration with association management systems has made that difficult or limited.

This paper is a starting point for raising wider industry awareness of existing AMS APIs and of what can be accomplished by using them, especially if associations show demand and a developer community can be organized.

This paper will also serve as a starting point for discussing possible business objectives for the APIs – important for assessing whether the APIs under development properly support those objectives.

Finally, this paper should inspire both business and technical experts to engage with the AMS providers at RESO to create a data dictionary of field names that should be common to all AMS and create a common mechanism for accessing that member information.

You can download the paper here: Real Estate Association APIs and Data Standards

Preventing Screen Scraping: Policy, Contracts and Technology Evaluation

If policies and contracts do not contain specific anti-scraping technology requirements, one can easily end up in an argument over whether the steps taken to prevent scraping are sufficient, even if those steps are demonstrably ineffective. For example, a website provider might implement a “CAPTCHA” on login and say, “This should be enough to prove the humanity of the user. It’s not a computer program using the website.” But many CAPTCHA tests are easy for computers to defeat (it’s an arms race!), and if all a data pirate needs to do is have a human being log in and/or complete a CAPTCHA test once per day and have the session cookie captured by a computer for use in scraping data, it’s not a very high barrier. Likewise, an anti-scraping solution might block an IP address as being used by a scraper if the website gets more than 20 or 30 information requests per minute from that address. While that seems like a reasonable step, these days the more advanced scrapers spin up a hundred servers on different IP addresses, have each of them grab the data from just a few pages, and then move those servers to different IP addresses.

Thus, anti-scraping is difficult. While the mechanisms mentioned above might play a part in a solution, one must implement a more comprehensive solution to have a reasonable chance of stopping the screen scrapers. Moreover, that more comprehensive solution should be detailed explicitly both in the terms of any contracts executed by the defending organization and in any policies it implements regarding reasonable security measures.
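The weakness of a per-IP rate rule is easy to demonstrate. The Python below is a minimal sliding-window limiter that refuses an IP after 30 requests in 60 seconds (thresholds chosen as assumptions within the 20-30 per minute range mentioned above), followed by a simulation. Timestamps are passed in explicitly so the example is deterministic; a real deployment would read a clock and share state across servers.

```python
from collections import defaultdict, deque


class PerIpRateLimiter:
    """Refuse requests from any IP that exceeds `limit` requests per `window` seconds."""

    def __init__(self, limit: int = 30, window: float = 60.0) -> None:
        self.limit = limit
        self.window = window
        self.hits: dict[str, deque] = defaultdict(deque)  # IP -> timestamps of allowed requests

    def allow(self, ip: str, now: float) -> bool:
        q = self.hits[ip]
        # Forget requests that have slid out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


if __name__ == "__main__":
    # One scraper on one IP: 100 requests in under a minute.
    limiter = PerIpRateLimiter()
    print(sum(limiter.allow("198.51.100.7", t * 0.5) for t in range(100)))  # 30

    # The same scraper spread over 100 IPs, 3 pages each: 300 requests in a minute.
    limiter = PerIpRateLimiter()
    print(sum(limiter.allow(f"203.0.113.{i}", k * 20.0) for i in range(100) for k in range(3)))  # 300
```

Pages that would have been throttled from one address pass untouched when spread across 100 addresses, which is why rate limiting is only one ingredient of a comprehensive solution.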

Anti-scraping requirements might look something like the following:

The display (website, app’s API) must implement technology that prevents, detects, and proactively mitigates scraping. This means implementing an effective combination of the countermeasures defined in “Figure 7: Automated Threat Countermeasure Classes” of the “OWASP Automated Threat Handbook” (reproduced below and available at https://www.owasp.org/index.php/OWASP_Automated_Threats_to_Web_Applications). Those countermeasures must be demonstrably effective against commercial scraping services as well as advanced and evolving scraping techniques.

The anti-scraping solution must consist of multiple countermeasures in all three classes of countermeasures (prevent, detect, and recover) as defined by OWASP, sufficient to address all aspects of the security threat model, including at least complete implementations of all of the following: Fingerprinting, Reputation, Rate, Monitoring, and Instrumentation.

Those fielding displays and APIs requiring anti-scraping technology must demonstrate compliance with the above requirements using technology they have built or a commercial product/service. It must be demonstrated that the technology meets those requirements and that it has been properly configured to effectively address scraping.
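One way to make these requirements auditable is to check a vendor’s declared countermeasures against them mechanically. The Python below is a sketch: the class assignments follow the countermeasure table in this post, and reading “multiple countermeasures in all three classes” as “at least two per class” is an assumption made for this example. A clean result shows only that the design covers the required classes; whether the countermeasures are demonstrably effective still has to be shown by testing.

```python
# Class assignments follow the countermeasure table in this post.
CLASSES = {
    "Requirements": {"prevent", "detect", "recover"},
    "Obfuscation": {"prevent"},
    "Fingerprinting": {"prevent", "detect"},
    "Reputation": {"prevent", "detect"},
    "Rate": {"prevent", "detect"},
    "Monitoring": {"detect"},
    "Instrumentation": {"prevent", "detect", "recover"},
    "Response": {"prevent", "recover"},
    "Sharing": {"prevent", "detect"},
}
REQUIRED = {"Fingerprinting", "Reputation", "Rate", "Monitoring", "Instrumentation"}
MIN_PER_CLASS = 2  # assumption: "multiple" read as "at least two"


def compliance_gaps(implemented: set[str]) -> list[str]:
    """List ways a declared set of countermeasures falls short of the requirements."""
    problems = [f"missing required countermeasure: {name}" for name in sorted(REQUIRED - implemented)]
    for cls in ("prevent", "detect", "recover"):
        count = sum(1 for name in implemented if cls in CLASSES.get(name, set()))
        if count < MIN_PER_CLASS:
            problems.append(f"only {count} countermeasure(s) in the {cls} class")
    return problems


if __name__ == "__main__":
    print(compliance_gaps(REQUIRED))
    print(compliance_gaps(REQUIRED | {"Response"}))
```

Notably, the five required countermeasures alone place only Instrumentation in the recover class, so a solution needs something like Response as well to have multiple countermeasures there.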

Following is some more detail about each countermeasure:

| Countermeasure | Brief Description | Prevent | Detect | Recover (Mitigate) |
|---|---|---|---|---|
| Requirements | Define relevant threats and assess effects on site’s performance toward business objectives | X | X | X |
| Obfuscation | Hide assets, add overhead to screen scraping and hinder theft of assets | X | | |
| Fingerprinting | Identify automated usage by user agent string, HTTP request format, and/or device fingerprint content | X | X | |
| Reputation | Use reputation analysis of user identity, user behavior, resources accessed, not accessed, or repeatedly accessed | X | X | |
| Rate | Limit number and/or rate of usage per user, IP address/range, device ID / fingerprint, etc. | X | X | |
| Monitoring | Monitor errors, anomalies, function usage/sequencing, and provide alerting and/or monitoring dashboard | | X | |
| Instrumentation | Perform real-time attack detection and automated response | X | X | X |
| Response | Use incident data to feed back into adjustments to countermeasures (e.g. requirements, testing, monitoring) | X | | X |
| Sharing | Share fingerprints and bot detection signals across infrastructure and clients | X | X | |

The appendix that follows provides more thorough OWASP countermeasure definitions.

Appendix: OWASP Countermeasure Definitions

The following is excerpted from “OWASP Automated Threat Handbook Web Applications”:

• Requirements. Identify relevant automated threats in security risk assessment and assess effects of alternative countermeasures on functionality, usability, and accessibility. Use this to then define additional application development and deployment requirements.

• Obfuscation. Hinder automated attacks by dynamically changing URLs, field names and content, or limiting access to indexing data, or adding extra headers/fields dynamically, or converting data into images, or adding page and session-specific tokens.

• Fingerprinting. Identification and restriction of automated usage by automation identification techniques, including utilization of user agent string, and/or HTTP request format (e.g. header ordering), and/or HTTP header anomalies (e.g. HTTP protocol, header inconsistencies), dynamic injections, and/or device fingerprint content to determine whether a user is likely to be a human or not. As a result of these countermeasures, for example, browsers automated via tools such as Selenium must certainly be blocked. The technology should use machine learning or behavioral analysis to detect automation patterns and adapt to the evolving threat on an ongoing basis.
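As a rough illustration of the simplest fingerprinting signals, the Python below inspects request headers. The token list and the rules are assumptions, and these checks only catch unsophisticated tools: headers are trivially spoofed, and a Selenium-driven browser sends ordinary ones. Catching those requires client-side evidence (WebDriver-controlled browsers report navigator.webdriver as true, for example) and the behavioral analysis described above.

```python
# Substrings seen in the default User-Agent of some common automation tools.
TOOL_TOKENS = ("headlesschrome", "phantomjs", "python-requests", "curl/", "wget/")


def automation_signals(headers: dict[str, str]) -> list[str]:
    """Return header-based hints that a request may come from automation."""
    h = {name.lower(): value for name, value in headers.items()}
    signals = []
    ua = h.get("user-agent", "").lower()
    if not ua:
        signals.append("missing User-Agent")
    elif any(token in ua for token in TOOL_TOKENS):
        signals.append("automation tool in User-Agent")
    if "accept-language" not in h:
        # Mainstream browsers normally send this header; many scripts do not.
        signals.append("missing Accept-Language")
    return signals


if __name__ == "__main__":
    print(automation_signals({"User-Agent": "python-requests/2.31.0"}))
```

A signal here would feed a score alongside reputation and behavior rather than trigger a block on its own.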

• Reputation. Identification and restriction of automated usage by utilizing reputation analysis of user identity (e.g. web browser fingerprint, device fingerprint, username, session, IP address/range/geolocation), and/or user behavior (e.g. previous site, entry point, time of day, rate of requests, rate of new session generation, paths through application), and/or types of resources accessed (e.g. static vs dynamic, invisible/hidden links, robots.txt file, paths excluded in robots.txt, honey trap resources, cache-defined resources), and/or types of resources not accessed (e.g. JavaScript generated links), and/or types of resources repeatedly accessed. As a result of these countermeasures, for example, known commercial scraping tools and the use of data center IP addresses must certainly be identified and blocked.
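A reputation check often combines several weak signals into a score. The Python below is a hypothetical sketch of two of the signals named above: requests from data center address ranges, and requests for honey-trap resources that a human would never see. The address ranges are RFC 5737 documentation blocks standing in for a maintained list of hosting-provider ranges, and the weights are arbitrary.

```python
import ipaddress

# Stand-ins for a real, regularly refreshed list of hosting/cloud provider ranges.
# These are RFC 5737 documentation ranges, used here purely as examples.
DATA_CENTER_RANGES = [ipaddress.ip_network(c) for c in ("198.51.100.0/24", "203.0.113.0/24")]


def from_data_center(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    # Membership is simply False when IPv4/IPv6 versions differ.
    return any(addr in net for net in DATA_CENTER_RANGES)


def reputation_score(ip: str, paths_requested: list[str], honey_traps: set[str]) -> int:
    """0-100 score; higher means more likely automated. Weights are arbitrary examples."""
    score = 0
    if from_data_center(ip):
        score += 50  # most human visitors do not browse from data center addresses
    if any(path in honey_traps for path in paths_requested):
        score += 50  # hidden links and robots.txt-excluded paths humans never follow
    return score


if __name__ == "__main__":
    print(reputation_score("203.0.113.7", ["/listings/1", "/trap"], {"/trap"}))  # 100
```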

• Rate. Set upper and/or lower limits and/or trend thresholds, and limit number and/or rate of usage per user, per group of users, per IP address/range, and per device ID/fingerprint. Note that this kind of countermeasure cannot stand alone, as attackers commonly use a slow crawl from many rotating IP addresses that can simulate the activity of legitimate users.

• Monitoring. Monitor errors, anomalies, function usage/sequencing, and provide alerting and/or monitoring dashboard.

• Instrumentation. Build in application-wide instrumentation to perform real-time attack detection and automated response including locking users out, blocking, delaying, changing behavior, altering capacity/capability, enhanced identity authentication, CAPTCHA, penalty box, or other technique needed to ensure that automated attacks are unsuccessful.

• Response. Define actions in an incident response plan for various automated attack scenarios. Consider automated responses once an attack is detected. Consider using actual incident data to feed back into other countermeasures (e.g. Requirements, Testing, Monitoring).

• Sharing. Share information about automated attacks, such as IP addresses or known violator device fingerprints, with others in same sector, with trade organizations, and with national CERTs.

Computer Vision and Improving Real Estate Search

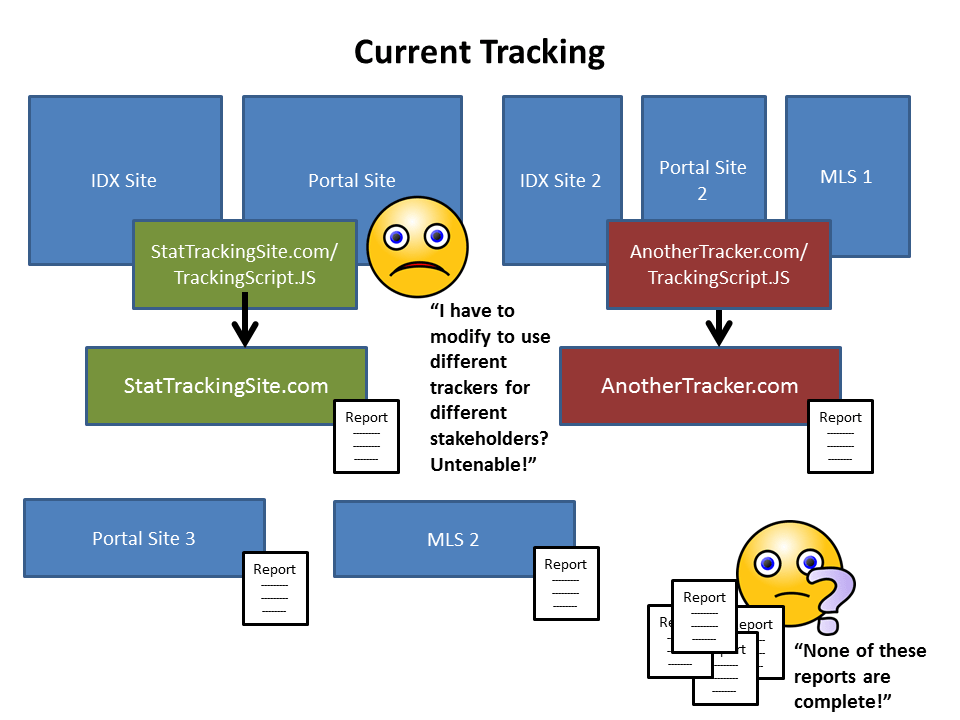

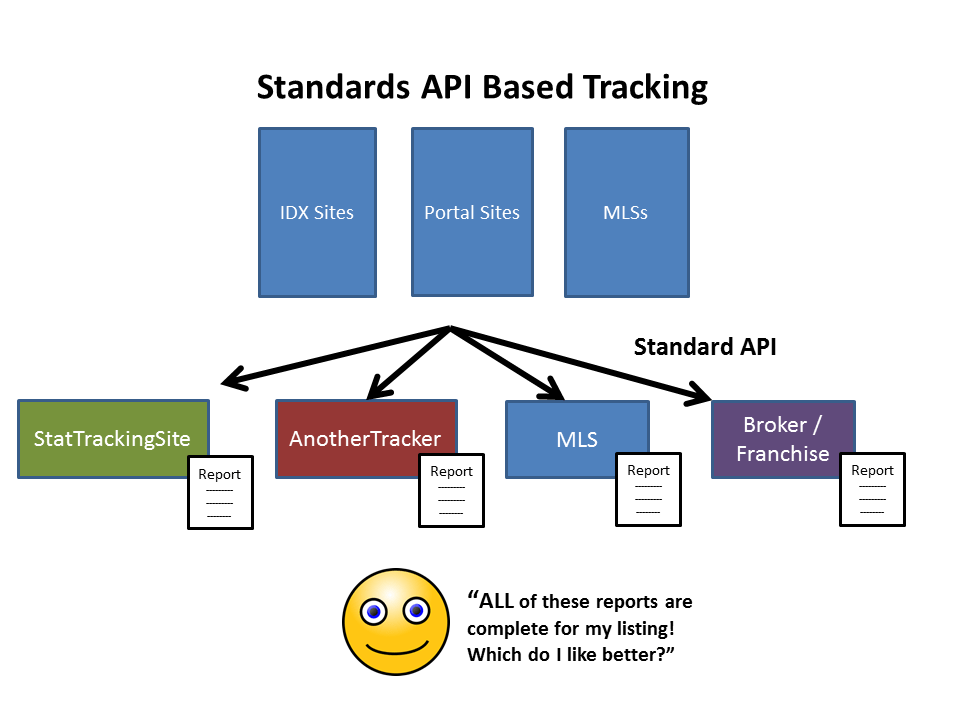

Last week I was on a panel at the RESO conference where we talked about software personalization as an important trend. Back in 2008, I wrote some articles about improving prospecting and real estate, one aspect of which was that we needed to get smarter about understanding consumer preferences so that consumers don’t have to page through so many listings or can at least see the most likely matches to their interests first. As I noted in that article, the tricky part was that “there are various qualitative aspects of property selection that we don’t currently track data for at the current time.” That’s where “computer vision”, a technology that is becoming both more robust and more common, could make a difference.

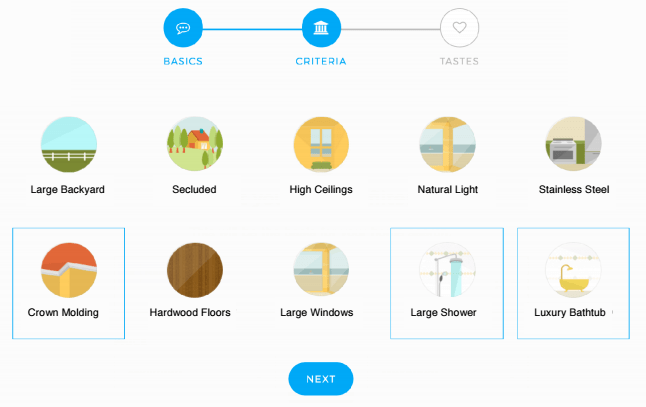

“Computer vision” is the ability of a computer to analyze photos and create data out of them. Imagine a consumer likes open floor plans, modern kitchens, wide driveways, high ceilings, mature trees, or lots of natural light – those are all things a consumer might mention when describing their dream home, but little of that information is reliably tracked by agents in most MLSs. With computer vision, that data could be extracted from the listing photos by a computer as keywords by which listings could be searched – without having to manually sort through many homes and many more photos.
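Here is a minimal Python sketch of turning photo tags into searchable data. The tags are hard-coded stand-ins for the output of a computer vision model (no real model or service is called), and the listing IDs and tag vocabulary are hypothetical. Once photos are tagged, an ordinary inverted index supports both feature search and pulling every photo of one room type across listings.

```python
from collections import defaultdict

# Stand-in for a computer vision model's output: listing -> photo -> tags.
# A real system would call an image-tagging service here; these tags are invented.
PHOTO_TAGS = {
    "L1": {"1.jpg": {"kitchen", "modern kitchen", "natural light"},
           "2.jpg": {"exterior", "mature trees"}},
    "L2": {"1.jpg": {"kitchen"},
           "2.jpg": {"living room", "open floor plan", "high ceilings"}},
    "L3": {"1.jpg": {"exterior", "wide driveway"}},
}


def build_index(photo_tags: dict[str, dict[str, set[str]]]) -> dict[str, set[str]]:
    """Inverted index: tag -> IDs of listings with at least one photo showing it."""
    index = defaultdict(set)
    for listing_id, photos in photo_tags.items():
        for tags in photos.values():
            for tag in tags:
                index[tag].add(listing_id)
    return index


def search(index: dict[str, set[str]], *wanted: str) -> set[str]:
    """Listings whose photos, taken together, show every wanted feature."""
    if not wanted:
        return set()
    return set.intersection(*(index.get(tag, set()) for tag in wanted))


def photos_of(photo_tags: dict[str, dict[str, set[str]]], room: str) -> list[tuple[str, str]]:
    """Every (listing, photo) pair tagged with a room, e.g. all kitchen photos."""
    return sorted((listing_id, photo)
                  for listing_id, photos in photo_tags.items()
                  for photo, tags in photos.items() if room in tags)


if __name__ == "__main__":
    index = build_index(PHOTO_TAGS)
    print(search(index, "kitchen", "natural light"))
    print(photos_of(PHOTO_TAGS, "kitchen"))
```

In practice the hard part is the tagging model and its accuracy, not the index; a missed tag silently hides a listing, which is the limitation the next paragraphs raise.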

I recently saw an example of computer vision demonstrated by RealScout (not a Clareity client) that impressed me. I don’t think any company has fully leveraged the potential of computer vision and created the “perfect” product with it, but this company had clearly made some real progress in applying computer vision to real estate search. During the demonstration they showed how they could automatically tag photos, so a consumer could, for instance, page through just the kitchen photos of multiple listings, even if the photos weren’t labeled “kitchen” by the MLS. The technology enables searches on normally unsearchable criteria, as well as side-by-side, room-by-room comparisons of images for key features and rooms, as illustrated below:

We’ve watched computer vision evolve over the past fifteen years or so – Google’s image search was launched in 2001 and has continued to get more sophisticated, and there are many other companies outside our industry that specialize in it. RealScout is not the only company using computer vision in real estate – during their demonstration they showed how Trulia has used it, and I’ve seen others explore this area over the past few years though not always release the resulting products. There are also quite a number of companies outside of our industry that license computer vision technology – of course, it would have to be optimized for real estate use. And there are some limitations to what the technology can do at this point, especially where listing photos are limited. No one wants their client to miss out on a home because the pictures didn’t highlight a certain feature. That said, this technology – if used artfully – can certainly augment existing listing search technologies and create a compelling user experience.

I have no doubts that our industry will continue to evaluate how to create great listing entry and search experiences using computer vision, and that the number of products – both existing and new – leveraging this technology will grow over time. This is certainly an area to keep an eye on.

Reducing the Risk of Real Estate Wire Fraud

The Groundbreaking Definitive Paper on the Subject

Ongoing bad publicity related to real estate transaction wire fraud threatens to damage the reputation of the real estate industry. Previous issue descriptions have been incomplete and have also not provided thorough guidance for those brokerages, title companies, attorneys, and others that engage internally in the wiring of funds or interact with the consumer regarding wire transfers. This paper provides information relevant not just for those directly establishing and communicating wiring instructions, but also for those just acting in an advisory role to the consumer during the transaction.

The paper includes best practices – sample procedures and communications gathered from franchises, brokers, title companies and others. It also includes a sample company policy Clareity Consulting has created based on both these best practices and on our experience advising brokerages and other companies regarding information security. Not all aspects of this paper will apply to all companies involved in the transaction, and what is relevant will still need to be tailored to an individual company’s business processes. Still, it provides a valuable starting place toward reducing the risk of wire fraud.

Please download the paper here:

Reducing the Risk of Real Estate Wire Fraud

MLSs Win with Their Business Rules in RETS

For the past few months I’ve been a bit quieter than usual on the blog because I’ve been working on a number of time-consuming projects including MLS regionalization, MLS selection, strategic planning sessions, and information security audits. But one very interesting project that has been taking some of my time has been an opportunity to work toward a standard for expressing business rules inside RETS. The focus so far has been mostly on listing input business rules, but that focus could expand in the future. This project should be of interest to every MLS, and I strongly encourage MLSs to participate in the ongoing process at RESO.

I first submitted the value proposition for this effort to RESO as part of a business case worksheet back in April of 2010: “MLSs with well documented business rules can more efficiently and smoothly move to a new MLS system, add additional front ends with full functionality or integrate other software that requires use of business rules – without manual work and often inaccurate results. This will result in smoother conversions, more software choice, and enhanced competition and innovation.” At that point it wasn’t prioritized, but in late 2015 I was asked by the RESO Research and Development work group chair, Greg Moore, to lead the charge to come up with a standard for expressing business rules inside RETS.

As part of the process, I gathered knowledge to attack the problem by visiting with a number of MLSs. During those visits, I sometimes saw things that made it even clearer how urgent it is to create a standard – one that can be understood by MLS staff and not just technical people – and to get it adopted. For example:

- At several MLSs, when I unpacked what their vendor had ‘coded’ as their listing input business rules into plain English, we found implementation errors. “That’s not how our rule is supposed to work,” became a common refrain during several of my visits.

- At one MLS, I saw a ‘botched’ calculated field that had been that way for years, simply because no one – not an analyst or an MLS staff person – could review the programmer’s work, looking at it in terms of the business rule that drove the calculation.

Also, because there’s no common way of expressing these rules, it’s hard for MLSs to talk about them, establish best practices, and discuss key differences in how data is validated during regionalization discussions.

Right now, the effort to come up with a standard for expressing business rules inside RETS is a work in progress, but it is moving quickly. So far, the group has agreed to continue down the path of using a well-established business rule language called RuleSpeak and developing a shorthand for rules expressed in RuleSpeak, which we call REBR (Real Estate Business Rules) Notation. RuleSpeak’s structured English notation is well suited to expressing business rules clearly and unambiguously, even complex ones, in non-technical language using business vocabulary. Expressions that non-technical MLS staff can read and validate are the single source of truth when it comes to business rules. Everything else is mediated by someone who is not the business owner, so errors can creep in along the way. Following are just a few common RuleSpeak examples. Note that most examples use RETS Data Dictionary names for fields, but I could just as easily have used more user-friendly MLS field labels.

- An Expired Listing must accept user input up to 15 days after Expiration Date.

- A Closed Listing must not accept user input. Enforcement: MLS Staff may override this.

- Data field GarageSpaces must have a value if GarageYN has a value of “Y”.

- ListingContractDate must be on or before today’s date.

- YearBuilt must be on or after 1700.

- ParkingTotal must be greater than or equal to the value of RentedParkingSpaces.

- ListPrice must be greater than or equal to 1, and less than or equal to 50,000,000.

- Status of an Active Listing of Residential Property Type may only change to one of the following: “Active”, “Cancelled”, “Extended”, “Under Agreement”, “Temporarily Withdrawn”. Enforcement: MLS Staff may override.

- Listing Status must be set to Expired on the Expiration Date if Current Listing Status is not Expired, Pending, Sold, or Leased.
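To show why plain-English rules make review easier, here is a minimal Python sketch that translates several of the rules above one-to-one into checks, so that each check can be compared line by line against its RuleSpeak sentence. This is an illustration only and not part of RuleSpeak, REBR Notation, or any RESO deliverable. The `Listing` record, its defaults, and the `validate` and `can_change_status` functions are hypothetical. Only the field names and the limits come from the rules themselves.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Allowed next statuses for an Active Residential listing, per the rule above.
ACTIVE_RESIDENTIAL_NEXT_STATUSES = {
    "Active", "Cancelled", "Extended", "Under Agreement", "Temporarily Withdrawn",
}


@dataclass
class Listing:
    # Field names mirror the Data Dictionary names used in the rules above;
    # the record shape and defaults are hypothetical, for illustration only.
    ListPrice: float
    YearBuilt: int
    ListingContractDate: date
    GarageYN: str = "N"
    GarageSpaces: Optional[int] = None
    ParkingTotal: int = 0
    RentedParkingSpaces: int = 0


def validate(listing: Listing, today: date) -> List[str]:
    """Return one plain-English message per rule the listing violates."""
    errors: List[str] = []
    # GarageSpaces must have a value if GarageYN has a value of "Y".
    if listing.GarageYN == "Y" and listing.GarageSpaces is None:
        errors.append('GarageSpaces must have a value if GarageYN is "Y".')
    # ListingContractDate must be on or before today's date.
    if listing.ListingContractDate > today:
        errors.append("ListingContractDate must be on or before today's date.")
    # YearBuilt must be on or after 1700.
    if listing.YearBuilt < 1700:
        errors.append("YearBuilt must be on or after 1700.")
    # ParkingTotal must be greater than or equal to RentedParkingSpaces.
    if listing.ParkingTotal < listing.RentedParkingSpaces:
        errors.append("ParkingTotal must be >= RentedParkingSpaces.")
    # ListPrice must be between 1 and 50,000,000 inclusive.
    if not 1 <= listing.ListPrice <= 50_000_000:
        errors.append("ListPrice must be between 1 and 50,000,000.")
    return errors


def can_change_status(current: str, new: str, property_type: str,
                      staff_override: bool = False) -> bool:
    """Status-change rule for Active Residential listings; MLS staff may override."""
    if staff_override:
        return True
    if current == "Active" and property_type == "Residential":
        return new in ACTIVE_RESIDENTIAL_NEXT_STATUSES
    # Transitions from other statuses or property types are outside this rule.
    return True
```

Consider a listing with `ListPrice=0`, `YearBuilt=1650`, a `ListingContractDate` later than today, and `GarageYN="Y"` but no `GarageSpaces`. For that listing, `validate` returns four messages, one for each broken rule. Because each check maps to exactly one sentence, MLS staff could spot an implementation error like the ones described earlier without reading any other code.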

Following is an example of a rule in both RuleSpeak and REBR Notation:

| Rule stated in RuleSpeak “Structured English” | Same Rule using REBR Notation |
|---|---|
| An Expired Listing must accept user input up to 15 days after Expiration Date. | ALLOW_EDIT LISTING YES IF ListingStatus is Expired AND TODAY = ONORBEFORE (Expiration Date + 15 DAYS) ENDIF |
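For illustration, here is one way a system might evaluate the rule in the table. This is a minimal Python sketch. It assumes `TODAY = ONORBEFORE (Expiration Date + 15 DAYS)` means "today is on or before the expiration date plus 15 days". REBR Notation was still under discussion, so that reading, the function name `allow_edit`, and the plain-string status values are all hypothetical.

```python
from datetime import date, timedelta

# "up to 15 days after Expiration Date"
EDIT_WINDOW_DAYS = 15


def allow_edit(listing_status: str, expiration_date: date, today: date) -> bool:
    """Hypothetical evaluation of:
    ALLOW_EDIT LISTING YES IF ListingStatus is Expired
    AND TODAY = ONORBEFORE (Expiration Date + 15 DAYS) ENDIF

    Returns True only when this rule grants edit access. Other rules
    (not modeled here) govern edits for listings in other statuses.
    """
    window_end = expiration_date + timedelta(days=EDIT_WINDOW_DAYS)
    return listing_status == "Expired" and today <= window_end
```

With an expiration date of March 1, 2016, `allow_edit("Expired", ...)` is true through March 16 (the 15th day after expiration) and false from March 17 onward. Keeping the window length in one named constant mirrors how a single number in the RuleSpeak sentence should map to a single value in the implementation.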

None of this language is finalized yet: this is just research happening inside a business rules sub-group of the RESO Research & Development (R&D) group – but hopefully readers will see how valuable all of this can be to them and we’ll see more participation in this part of RESO. If you want to get involved in the group, please email Jeremy Crawford and ask to be added to the business rules group. If you already belong to RESO, whether or not you are in the work group, you can just log into the RESO collaboration system and get involved with the discussions there too.

MLS Regionalization: Beyond the Needs of Any One MLS

It’s Not Just About Overlapping Market Disorder

I was talking the other day with my friend Kevin McQueen about MLS consolidation and regionalization. Both of us help MLSs through the process, and we like to talk and share our experiences with each other in order to help our clients better and move the industry forward. One of the challenges we discussed was that, for MLS regionalization to gain momentum, leadership at every MLS in the country – including boards of directors – needs to better understand why MLS consolidation and regionalization matter. Many don’t attend industry conferences and are not aware of the larger strategic issues driving it.

When Clareity Consulting discusses the strategic reasons for MLS regionalization, we often focus on the following objectives:

1. Reduction in cost
2. Improvement in MLS product / service scope
3. Associations can focus more on association functions
4. Reduction / elimination of arbitrary information and system barriers
   - Reduction of need for multiple memberships
   - Reduction of number of data feeds for participants’ information systems / websites
   - Providing more comprehensive and accurate statistics for overlapping market areas
   - Providing wider listing exposure for sellers
5. Reduction of the number of systems some members need to learn
6. Improvement of MLS rule and data accuracy compliance, providing uniform rules and enforcement
7. Providing efficiency for participants who want to be involved with governance / committees

[Update: these days I have even more goals, including driving technology provider interest in our industry.]

Many MLSs evaluating regionalization on their own initially consider only one or two of these objectives, for example reduction in cost or elimination of the arbitrary information barriers, and don’t evaluate the decision against all the items listed above.
As a result, they might conclude something like, “We’re geographically isolated, so we don’t need to consider regionalization.” But even the list of objectives above is incomplete, focusing on local and regional needs rather than the larger threats facing the MLS industry. If one looks at NAR’s D.A.N.G.E.R. Report, one should consider how the current, splintered MLS industry is – or is not – ready to deal with the threats detailed in that report, including, but not limited to:

A more consolidated MLS industry would be better able to mitigate these risks.

Back to my conversation with Kevin. He asked, “How do we reach executives and board members at associations/MLSs that are resistant to, or uninformed about, the possibilities for regionalization?” Kevin suggested that we could speak on the subject more at conferences. But many of the people who need to be reached don’t attend these conferences, and they certainly wouldn’t attend a session on regionalization if they’ve already made up their minds on the subject.

We also discussed NAR mandating NAR- or CMLS-developed best practices for MLSs. While the core standards approach NAR took with associations could be useful, it leads to a very slow, incremental process that may have been appropriate 20 years ago but is too slow to meet today’s challenges. Based on the MLS regionalization end-game described in my recent Inman article, NAR could simply mandate standards for MLSs that meet the conditions of the end-game and initiate a fast process to get us there. But is a top-down mandate the best approach? Kevin and I both believe that the best approach is a collaborative one, where association and MLS leadership engage in a consensus-driven process for regionalization.
Clareity recently outlined this process in an Inman News article, republished here: “MLS Regionalization: Breaking Through”

Are the threats to the industry and the benefits of MLS regionalization becoming clear enough that momentum behind regionalization initiatives will radically increase? Will leaders take an active role in designing the best possible future for their organizations and the industry at large? Or will they continue to focus on their own organization and ignore what is ultimately best for their members and the industry? Or will they wait for one of the worst threats from the D.A.N.G.E.R. Report to occur and make all of this irrelevant?

MLS Regionalization: Breaking Through

In part one of this article ("MLS Regionalization: Setting the Goal") Clareity outlined the criteria for determining the future “end game” for MLS consolidation. In this part, we will describe Clareity’s process for MLS consolidation and regionalization and how we overcome some of the common objections to consolidation during that process.

A good process for MLS consolidation and regionalization has four parts: planning, decisions, formalizing decisions, and actualization:

1. PLANNING

In the first part, planning, organizational leaders meet with a facilitator who can drive consensus on the hard issues, including goals, ownership and governance, money flow, leadership, staffing, and the product and service offerings. The facilitator provides examples of how decisions in these areas have worked in other organizations and captures the group’s consensus in a document that all participants approve, so there is no backtracking later. The leaders may consult with their boards of directors during this phase and work to sell the consensus plan. Other decisions, such as specific technologies, will need to be made along the way, but the decisions above are the ones that set the long-term framework, while technologies come and go. Some groups want to focus on cost right away, but how can cost be discussed when no decisions have been made yet about the factors that drive it – leadership, staffing, products, and services? And how can one make decisions about those things until a decision-making structure has been put in place? A successful planning process is all about asking the right questions at the right time.

2. DECISIONS

In the decision-making phase, the leaders of all stakeholder organizations meet together to discuss areas still lacking consensus. Group meetings are an important part of the process because they are an opportunity to address many remaining fears and ensure all valid issues are on the table. The facilitator can provide perspective and knows how to address common objections. The group must trust the process, and its members must trust that they are all working toward a common goal: a better MLS that serves all of the subscribers in the region well. In this phase, the group can make more definitive decisions based on the initial planning, which the facilitator captures.

3. FORMALIZING DECISIONS

Next, the facilitator will use the documentation created in the previous step as the basis of a business plan. All of the planning and decisions will be incorporated into this document. A draft budget, a plan for the next steps, and a timeline for regionalization will be developed and included as well.

4. ACTUALIZATION

The final step, actualization, involves creating the company, addressing all of the legal issues, commencing initial and ongoing communications, selecting technology and contracting (or re-negotiating) as needed, and implementing MLS system changes as needed. Having top-notch legal counsel is critical in this phase, and Clareity Consulting likes to collaborate with the best in the business.

OVERCOMING OBJECTIONS

There are usually many questions and fears about MLS regionalization that must be addressed along the way. Sometimes agents worry that competitors from the adjoining MLS will sell outside their traditional area and create problems, and they need to be reassured that this has not been a serious issue in regional MLSs that have formed in the past. Other times, MLS executives and staff fear for their jobs, or board members worry about the continuation of their leadership roles – worries that can be addressed by discussing the role of service centers in the new organization and creating a plan for merging leadership. Some will worry about strife between associations in a regional MLS, but strong bylaws and intellectual property agreements can minimize that risk. Revenue traditionally shared with the association can also be a concern; it can be addressed in a variety of ways, and Clareity’s CEO, Gregg Larson, described one such approach at Clareity’s MLS Executive Workshop. The point is that common concerns about MLS regionalization can be addressed as part of the process, and such concerns shouldn’t stop the process from happening.

SUCCESS

With a sound process and proper facilitation, organizations working together can demystify and accomplish MLS consolidation and regionalization. Once fears are put aside and the MLSs commit to engaging in the process, it is generally possible to address stakeholder issues and concerns, achieving the goal of having a single MLS with strong capabilities that covers an appropriate geographic area.

MLS Regionalization: Setting the Goal

Why are there still so many MLSs? I’d argue it’s mainly because we don’t have the answer to other questions: How many MLSs should there be, and where are their borders? Should there be six MLSs? 30? 60? 100? One? Can anyone be held to account for not meeting a goal that has not been set? Before we consider how to achieve a goal that will enable consolidation, we need to know what that goal – the “win condition” – is.

Clareity Consulting is studying the MLS regionalization “win condition”. We believe that the industry first needs to understand what the consumer considers to be a natural market area. If someone gets a job in Manhattan, New York City, they may end up living in that borough (2 MLSs), in one of the outer boroughs or on Long Island (several other MLSs), or taking the train up to Westchester or Connecticut, or out to New Jersey (even more MLSs). How can an agent serve his or her customer when he or she can’t set up a single prospect search in the MLS system, since the data is spread out over nearly a dozen MLSs? The situation is even worse when MLS geographies overlap or a property is on the border of more than one MLS. In that situation, agents can’t find all the CMA “comps” they need in one system. If an MLS doesn’t cover the natural market area, including overlapping and adjoining areas, it is doing its subscribers and their clients a tremendous disservice. How can that be justified in today’s world, where real estate portals have no boundaries and consumers are free to search everywhere?